The Battle for Safe Superintelligence: Ilya Sutskever's Return

Last year, Ilya Sutskever was the name everyone associated with genius in the AI world. As a co-founder of OpenAI and a key figure in the creation of the groundbreaking AlexNet convolutional neural network, built with Alex Krizhevsky and Geoffrey Hinton, Ilya was one of the most respected AI researchers of our time. People often said that OpenAI was nothing without its people, and Ilya was a cornerstone. However, his career took a dramatic turn when he experienced a rapid fall from grace.

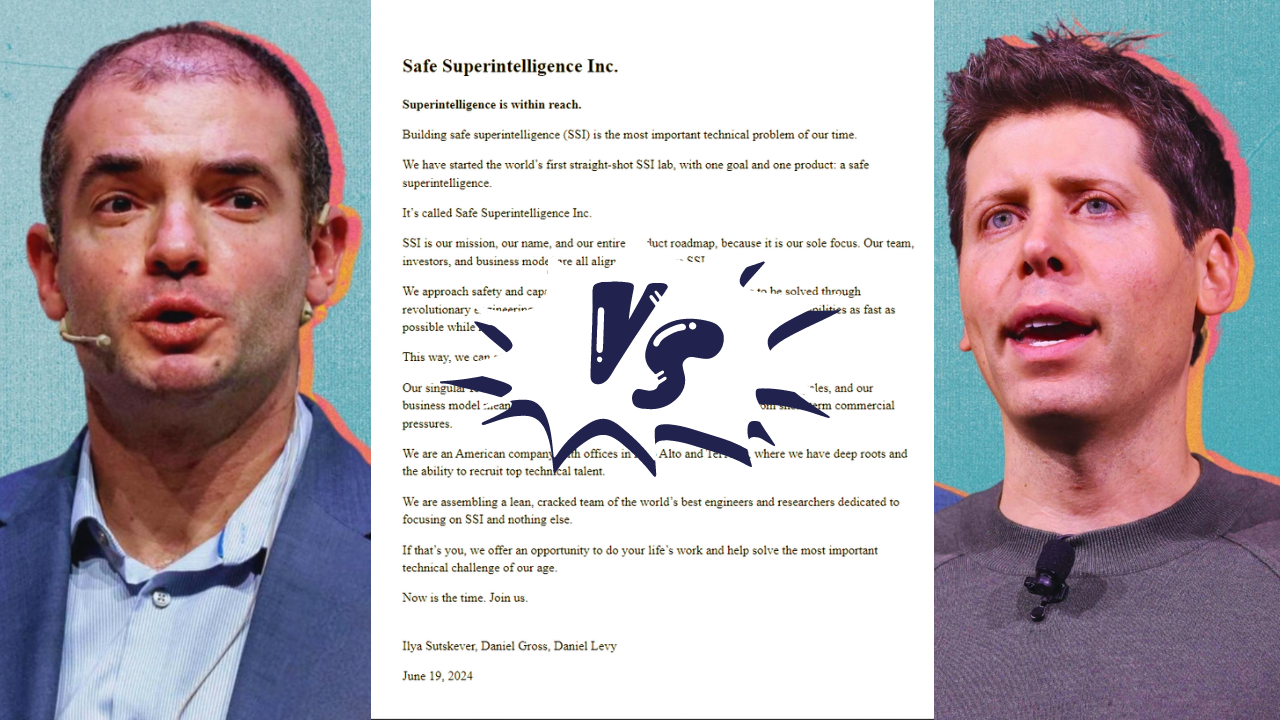

In a shocking twist, it was revealed that Ilya was one of the board members who voted to fire Sam Altman as CEO of OpenAI. This move was supposedly to save humanity from what Ilya saw as Sam’s reckless techno-capitalist ambitions. However, this betrayal backfired spectacularly. Altman quickly regained his position, becoming even more powerful, while Ilya was branded a villain and mysteriously disappeared from the public eye.

It seemed like Ilya’s career was over, but yesterday he reemerged with a big announcement. On June 19th, 2024, Ilya introduced a new startup called Safe Superintelligence Inc. (SSI), with offices in Palo Alto and Tel Aviv. This company aims to develop superintelligence that is safe for humanity. But before we dive into why this might be a dubious goal, let's appreciate SSI’s website. Remarkably, it operates without any JavaScript, Tailwind, TypeScript, or Next.js: just five lines of CSS and some HTML. The design is so simple and elegant, it almost seems like it was created by some kind of superintelligence.

But what is artificial superintelligence (ASI)? ASI is a hypothetical form of intelligence that would surpass human capabilities by far. Think about how we view ants: they are intelligent in their own right but can't grasp human concepts like technology or literature. If ASI comes into existence, it might see us with a similar gap in intelligence. This could be extremely dangerous, as the ASI might regard humans the way we regard ants—insignificant and expendable.

Yet we haven’t even achieved artificial general intelligence (AGI). AGI would have human-like intelligence, capable of learning new skills across various domains. The closest we have are multimodal large language models like GPT-4 and Gemini, which process human-created data but aren't solving new scientific problems or creating new art. Despite their limitations, these models have massive commercial potential. Recently, Sam Altman hinted at transforming OpenAI into a fully for-profit company, moving away from its current capped-profit structure.

This potential shift has led to skepticism about OpenAI’s true openness. Elon Musk, another co-founder, previously filed a lawsuit against OpenAI, alleging a betrayal of its founding mission. Although he recently dropped the lawsuit, it highlighted ongoing tensions within the AI community.

Now, let’s look at SSI. Is it a genuine venture, a publicity stunt, or something more sinister? The website reveals that Daniel Gross, a prolific AI investor known for backing Magic.dev, is a co-founder. With Ilya and Daniel at the helm, SSI has the potential to attract top talent globally. However, their announcement lacked any groundbreaking revelations, relying instead on their reputations and promises to achieve ASI while skipping AGI altogether.

Interestingly, Nvidia, now the world’s most valuable company, stands to benefit significantly from SSI’s aspirations, potentially selling trillions of dollars worth of H100s to the new startup. Despite the hype, SSI remains speculative until it can deliver tangible results.

A darker theory also emerges when considering the name SSI. In 1974, John C. Lilly, known for developing the float tank and attempting to communicate with dolphins, wrote about "Solid State Intelligence" (SSI), a malevolent entity created by humans that evolves into an autonomous bioform. Given Ilya’s former title as chief scientist at OpenAI, the parallels are eerie.

When a company claims to be "super safe," it often suggests the opposite. Historically, things marketed as trustworthy or healthy have often turned out to be neither. Currently, militaries worldwide use AI for targeted strikes, which some argue is preferable to harming civilians. However, the real fear with superintelligence is not a rogue AI turning against humanity but the technology falling into the wrong hands, leading to catastrophic outcomes akin to those depicted in Terminator.

Thank you for reading! Let's continue the conversation about the future of AI and its impact on our world.
